AI has been glorified as the future of automation, often portrayed as the ultimate solution for efficiency, decision-making, and innovation across industries. It has been marketed as an all-encompassing technology capable of transforming everything from healthcare and finance to autonomous systems and industrial processes.

In practice, this narrative does not match reality: AI in its current form is too limited to be relied upon for mission-critical applications. Although it has demonstrated some success in controlled settings, it struggles to adapt to real-world complexity and unpredictability. Tech giants celebrate cloud-trained AI models, yet these solutions often fail spectacularly when deployed in dynamic, unpredictable environments. This is because AI lacks commonsense reasoning and struggles with real-world subtlety; it does not understand the world in the way humans do. It is typically trained on synthetic or limited datasets that fail to capture the diverse and complex scenarios it is expected to handle. As a result, AI systems often misinterpret context, producing unreliable or misleading outcomes in unpredictable operating environments.

The Consequences of AI’s Limitations

A lack of commonsense understanding of how the physical world works, combined with limited training data, is a fundamental limitation of AI systems. It can lead to costly failures, false predictions, and, in the worst cases, complete system breakdowns, making such systems unsuitable for environments where precision and reliability are paramount.

Another significant limitation, particularly for large-scale models such as LLMs, is that AI models require powerful computing resources, making them inefficient for real-time, low-power edge applications. That said, Nvidia's latest chipsets, such as the Jetson Orin series, are helping to bridge this gap by providing high-performance, power-efficient AI processing directly on edge devices.

While these new chipsets allow AI models to run locally and reduce reliance on cloud computing, AI in general still faces challenges such as excessive power consumption compared with deterministic, DSP algorithm-based solutions, reliance on limited datasets, and a lack of explainability. These factors make AI unsuitable for industries that require strict regulatory compliance and safety. Some smaller AI models can be optimised for edge deployment, but many modern AI architectures remain computationally expensive and impractical for real-time, low-power edge applications.

Furthermore, most ML models rely on generalised feature extraction algorithms (mean, standard deviation, kurtosis, correlation, etc.) and are trained on limited, often unrealistic datasets. AI's reasoning is entirely data-driven: a model's behaviour is learned from examples rather than designed, so we still don't fully understand how these models reach their outputs. This makes them very different from traditional DSP algorithms, which follow a mathematical recipe or a set of predefined rules. When faced with new, unseen conditions, AI often produces inaccurate or misleading results. In contrast, Real-Time Edge Intelligence (RTEI) leverages DSP algorithms grounded in physics and mathematics, making them accurate and reliable in complex, real-world applications.

The AI Illusion: Why Traditional AI Fails at the Edge

Cloud-based AI solutions dominate today's landscape largely because the models require vast computational resources to function effectively. When the same models are deployed on edge devices with limited power and processing capacity, AI's inefficiencies become apparent.

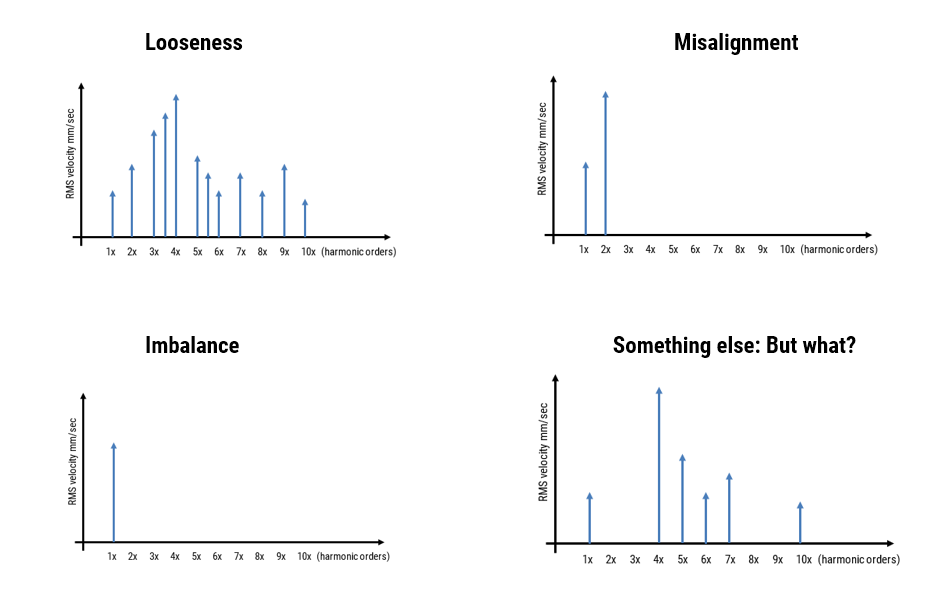

Predictive maintenance in industrial environments is a pertinent example. AI-based solutions are often promoted as game-changers, yet they struggle with a fundamental issue: the lack of real-world failure data. Most foremen and factory managers will not allow researchers to deliberately break machines for data collection, so AI models end up trained on synthetic or limited failure cases. This leaves significant gaps in the model's understanding of the normal and abnormal behaviour of the machine or process, leading to potential misdiagnoses and operational inefficiencies.

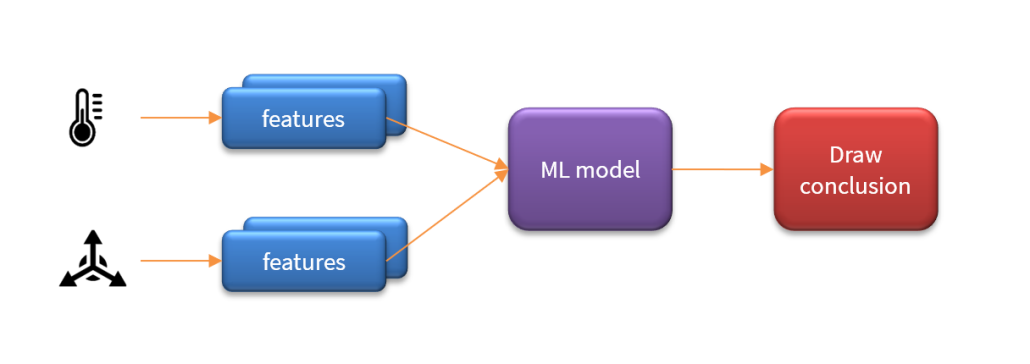

A more effective approach, Real-Time Edge Intelligence (RTEI), combines DSP algorithms for feature extraction with ML models for classification. In vibration analysis, for example, DSP techniques can generate harmonic fingerprints of velocity and displacement (feature extraction) that reveal anomalies before they lead to system failure. These fingerprints, or features, are then fed into ML models for fault classification. This hybrid approach improves accuracy and robustness because the feature extraction stage relies on physics and engineering principles (e.g. Fourier analysis, Kalman filtering) rather than on data-driven learning.
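
The DSP feature-extraction stage described above can be sketched as follows, under stated assumptions. The function evaluates a single-bin discrete Fourier transform (DFT) at each multiple of a known shaft frequency, then converts each acceleration amplitude into velocity and displacement amplitudes by dividing by ω and ω², the standard relationship for a sinusoid. The function name, the accelerometer input, and the 25 Hz test signal are hypothetical; a production system would more likely use an FFT with a window function and would also need to track the shaft frequency itself.

```python
import cmath
import math

def harmonic_fingerprint(accel, fs, f0, n_harmonics=5):
    """Amplitude of the first n_harmonics multiples of f0 in an acceleration signal.

    Assumptions (illustrative only): accel is acceleration in m/s^2, f0 is the
    known shaft rotation frequency in Hz, and the window holds a whole number
    of cycles of every harmonic, so no window function is applied.
    """
    n = len(accel)
    fingerprint = []
    for k in range(1, n_harmonics + 1):
        f = k * f0
        # Single-bin DFT evaluated at exactly f Hz
        bin_value = sum(x * cmath.exp(-2j * math.pi * f * i / fs)
                        for i, x in enumerate(accel))
        accel_amp = 2.0 * abs(bin_value) / n  # peak amplitude of this harmonic
        omega = 2.0 * math.pi * f
        fingerprint.append({
            "freq_hz": f,
            "accel": accel_amp,
            "velocity": accel_amp / omega,           # integrate once: divide by omega
            "displacement": accel_amp / omega ** 2,  # integrate twice: divide by omega^2
        })
    return fingerprint

# Hypothetical signal: 25 Hz shaft (1500 rpm) with a 0.3 m/s^2 second harmonic
fs = 2000
signal = [1.0 * math.sin(2 * math.pi * 25 * i / fs)
          + 0.3 * math.sin(2 * math.pi * 50 * i / fs + 0.5)
          for i in range(fs)]
for h in harmonic_fingerprint(signal, fs, 25.0, n_harmonics=3):
    print(f"{h['freq_hz']:5.1f} Hz  vel {h['velocity'] * 1000:7.3f} mm/s  "
          f"disp {h['displacement'] * 1e6:7.2f} um")
```

The resulting list of per-harmonic velocity and displacement amplitudes is the kind of compact, physically meaningful feature vector that could then be passed to an ML classifier.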

RTEI: The Future of Edge Intelligence

RTEI represents a fundamental shift in AI for edge computing. By integrating real-time DSP algorithms with ML models, RTEI enhances accuracy, reliability, and computational efficiency. Unlike traditional AI, which operates on probabilistic reasoning, RTEI builds on fundamental scientific principles, making it more predictable and better suited to mission-critical applications such as autonomous vehicles, industrial automation, and medical diagnostics, where a single misjudgement could have catastrophic consequences.

For Edge IoT to reach its true potential, intelligence must be embedded directly into devices. We are already seeing promising advancements: industrial-grade vibration analysis systems that use real-time DSP algorithms to detect early signs of mechanical failure; aircraft autopilot systems that rely on deterministic control algorithms rather than AI, ensuring mission-critical reliability in aviation; and self-driving cars that use LiDAR, cameras, and other sensors to navigate autonomously without depending solely on AI-based decision-making. These systems prioritise reliability through scientifically proven methods rather than speculative, data-driven predictions.

A key point to realise is that AI reasons in a fundamentally different way from traditional DSP algorithms. DSP algorithms are based on predefined rules and mathematical concepts, i.e. they are designed with human intelligence, whereas how an AI model reaches its result remains an enigma. This opacity is the primary reason why AI models shouldn't be allowed to operate on critical processes without scrutiny.

RTEI enhances the overall solution by adapting its feature extraction algorithms to real-world variations, ensuring consistently high-quality data for AI classification. For example, when analog sensor data is sampled with an ADC, temperature variations in the instrumentation electronics can cause the sampling rate to vary slightly. If features are then computed using the nominal sampling rate, every frequency component appears slightly shifted, creating a mismatch between the ideal model and the real-world signal presented to the classifier. A conventional AI model would struggle here, as such variations were probably not taken into account during its training phase.

This is where DSP algorithms, such as a method that analyses timestamps or a Kalman filter, can shine, because sampling rate variation can be included in the estimation model. The DSP estimation algorithm can then estimate the signal's sampling rate in real time and use this estimate in the subsequent feature extraction steps. This ensures that only high-quality features are provided to the classifier in environments with varying temperatures, a very realistic scenario! Finally, this approach has the added advantage of requiring less ML training data, which speeds up development and lowers project costs.
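
As an illustration of the estimation idea described above, the sketch below implements a small two-state Kalman filter that tracks an ADC's actual sample period from the timestamp attached to each sample. The state model (latest timestamp plus sample period, with the period drifting as a slow random walk), the class name, and the noise values are assumptions chosen for the example, not a description of any specific RTEI implementation.

```python
import random

class SampleRateKalman:
    """Hypothetical two-state Kalman filter tracking an ADC's actual sampling rate.

    State x = [t, T]: t is the time of the latest sample (s) and T is the
    sample period (s). Between samples t advances by T, while T follows a slow
    random walk (an assumed model of thermal clock drift). Each sample arrives
    with a jittery timestamp, which is the only measurement.
    """

    def __init__(self, t0, period0, q_period=1e-18, r_time=1e-10):
        self.x = [t0, period0]
        self.P = [[r_time, 0.0], [0.0, (0.01 * period0) ** 2]]  # initial uncertainty
        self.q = q_period  # variance of the period random walk per sample
        self.r = r_time    # variance of the timestamp jitter

    def update(self, timestamp):
        """Fuse one new timestamp and return the estimated sampling rate in Hz."""
        t, period = self.x
        (p00, p01), (p10, p11) = self.P
        # Predict with F = [[1, 1], [0, 1]]: P' = F P F^T + diag(0, q)
        t_pred = t + period
        a00 = p00 + p01 + p10 + p11
        a01 = p01 + p11
        a10 = p10 + p11
        a11 = p11 + self.q
        # Update with H = [1, 0]: only the timestamp is measured
        s = a00 + self.r
        k0, k1 = a00 / s, a10 / s
        innovation = timestamp - t_pred
        self.x = [t_pred + k0 * innovation, period + k1 * innovation]
        self.P = [[(1 - k0) * a00, (1 - k0) * a01],
                  [a10 - k1 * a00, a11 - k1 * a01]]
        return 1.0 / self.x[1]

# Simulated ADC: nominal 1000 Hz, actually running at 1002 Hz after drift,
# with 10 us of timestamp jitter (all values assumed for illustration)
rng = random.Random(42)
true_fs, jitter = 1002.0, 1e-5
stamps = [n / true_fs + rng.gauss(0.0, jitter) for n in range(5000)]

kf = SampleRateKalman(stamps[0], 1.0 / 1000.0, r_time=jitter ** 2)
for ts in stamps[1:]:
    fs_est = kf.update(ts)
print(f"estimated sampling rate: {fs_est:.2f} Hz")
```

In a full pipeline, the estimated rate would replace the nominal rate wherever the feature extraction needs it, for example when converting frequency-bin indices into hertz. The process noise `q_period` trades tracking speed against estimate noise: a larger value follows fast thermal drift more closely but produces a noisier rate estimate.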

The Role of Arm Processors in RTEI

A major driving force behind this revolution is the Arm-based processor ecosystem. Unlike traditional cloud-based AI solutions, Arm Cortex processors (including newer Cortex-M cores such as the Cortex-M55 and Cortex-M85, which implement Arm's Helium vector extension) provide a power-efficient way to run edge-optimised AI models in real time. These processors are already at the core of smart sensors, embedded systems, and industrial automation, allowing AI-powered IoT devices to process data and react immediately to changes in their environments.

Lockstep Processor Technology for Mission-Critical Systems

A fundamental aspect of mission-critical systems is lockstep processor technology, which provides redundancy and fault detection in real-time applications. Processors such as the Arm Cortex-R4 and Cortex-R5 are designed with lockstep functionality, in which two identical processor cores execute the same instructions simultaneously and their results are compared.

Lockstep technology is particularly useful for detecting hardware faults, which can arise from environmental influences, component ageing, and external interference. For example, electromagnetic interference (EMI) from industrial machinery or power supply fluctuations can flip bits in processor registers or memory, corrupting data and leading to algorithmic errors.

A key property of dual-core lockstep processing is that it detects discrepancies but cannot determine which core is correct. Since both cores execute the same software, software bugs appear identically on both, so lockstep cannot detect programming errors. For true fault tolerance and error correction, a Triple Modular Redundancy (TMR) approach is often used: three processors execute the same software, and a majority vote determines the correct outcome, either on every cycle or per function.
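
The difference between detection and correction can be shown with a minimal simulation. This sketch is purely conceptual: real lockstep and TMR comparison is performed in hardware, not in application code, and the function names and the control computation are hypothetical. It shows that a dual lockstep pair flags a single bit flip without knowing which core is wrong, that a TMR majority vote recovers the correct value, and that an identical software bug passes both checks.

```python
from collections import Counter

def lockstep_check(out_a, out_b):
    """Dual-core lockstep: True if both cores agree. A mismatch is detected,
    but there is no way to tell which core produced the wrong value."""
    return out_a == out_b

def tmr_vote(outputs):
    """Triple modular redundancy: return the majority value of three outputs,
    or None if all three disagree (no majority, so the fault is uncorrectable)."""
    if len(outputs) != 3:
        raise ValueError("TMR expects exactly three outputs")
    value, count = Counter(outputs).most_common(1)[0]
    return value if count >= 2 else None

def control_step(sensor_reading):
    """Hypothetical control computation executed identically on every core."""
    return (sensor_reading * 3 + 7) & 0xFFFF

def buggy_step(sensor_reading):
    """Hypothetical programming error: wrong sign, identical on every core."""
    return (sensor_reading * 3 - 7) & 0xFFFF

good = control_step(1200)
corrupted = good ^ (1 << 7)  # one core suffers a single bit flip (e.g. from EMI)

print(lockstep_check(good, corrupted))    # mismatch detected -> False
print(tmr_vote([good, corrupted, good]))  # majority vote restores the correct value

# A software bug produces the same wrong answer on every core,
# so neither lockstep nor TMR can see it.
buggy = buggy_step(1200)
print(lockstep_check(buggy, buggy), tmr_vote([buggy, buggy, buggy]) == buggy)
```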

ASIL Compliance and Automotive Safety

Lockstep technology is widely used to meet the higher Automotive Safety Integrity Levels (ASIL) defined in ISO 26262, ensuring that automotive safety systems can detect and handle processor faults. For example, if a processor mismatch occurs in an adaptive cruise control system, the system disengages cruise control rather than risk making an unsafe decision. This prioritisation of passenger safety over continuous operation is crucial for mission-critical applications.

For systems requiring the highest levels of reliability, such as flight control systems or nuclear power plants, TMR is employed so that a single fault cannot compromise the entire system.

Neoverse: High-Performance Edge Computing

Arm's Neoverse architecture is a high-performance processor family designed for data centres, edge computing, and cloud infrastructure. Unlike Cortex processors, which are commonly used in mobile and embedded applications, Neoverse is optimised for scalability, power efficiency, and AI acceleration. This makes it well suited to high-performance edge computing and an interesting alternative to Nvidia's GPU-based Jetson Orin modules.

With advancements in Arm Cortex (especially Helium-enabled Cortex-M cores) and Neoverse architectures, developers can now deploy real-time AI workloads directly on edge devices, removing the dependency on the cloud. This means better security, reduced costs, and low-latency decision-making, all of which are essential for next-generation IoT applications.

Key takeaway: The Evolution from AI to RTEI

Rather than dismissing AI's role in edge applications, RTEI represents the next evolutionary step, one that acknowledges AI's limitations and enhances its capabilities through deterministic DSP algorithms. Traditional AI struggles to generalise beyond pre-trained scenarios, lacks commonsense reasoning, and remains a black box in decision-making. These weaknesses make it ill-suited for dynamic, real-world applications.

The time has come to move beyond the assumption that cloud-trained AI can work everywhere. Instead, RTEI offers a hybrid intelligence system that combines the strengths of AI and DSP for real-time, reliable, and efficient edge intelligence.

By embedding intelligence directly into edge devices using Arm processors, lockstep technology, and deterministic DSP algorithms, we can build smarter, safer and more adaptable systems.
